This is not the Yosemite Firefall.

This moment never happened; this place does not exist.

I know that, because I wasn’t there – more importantly, nobody ever was.

It’s a scene that was “imagined” by a piece of code that scours the internet for sources in its never-ending quest for “learning”.

This is probably one of the fastest-ageing posts I’ll ever write, with some channels predicting AI to grow in power and abilities by over a thousand times in the next 3 years – faster than any technology we’ve previously seen as “scary”. Indeed, some countries (Italy) and platform owners (Elon Musk, until his latest U-turn) have found it so concerning that they’ve attempted to block its adoption around the world.

But should we, specifically as landscape photographers, be concerned?

In this article (and it’s a long one!), we’re going to explore the impacts – both positive and negative – that AI could currently have on Landscape Photography, along with what that could mean for each of us in the long term.

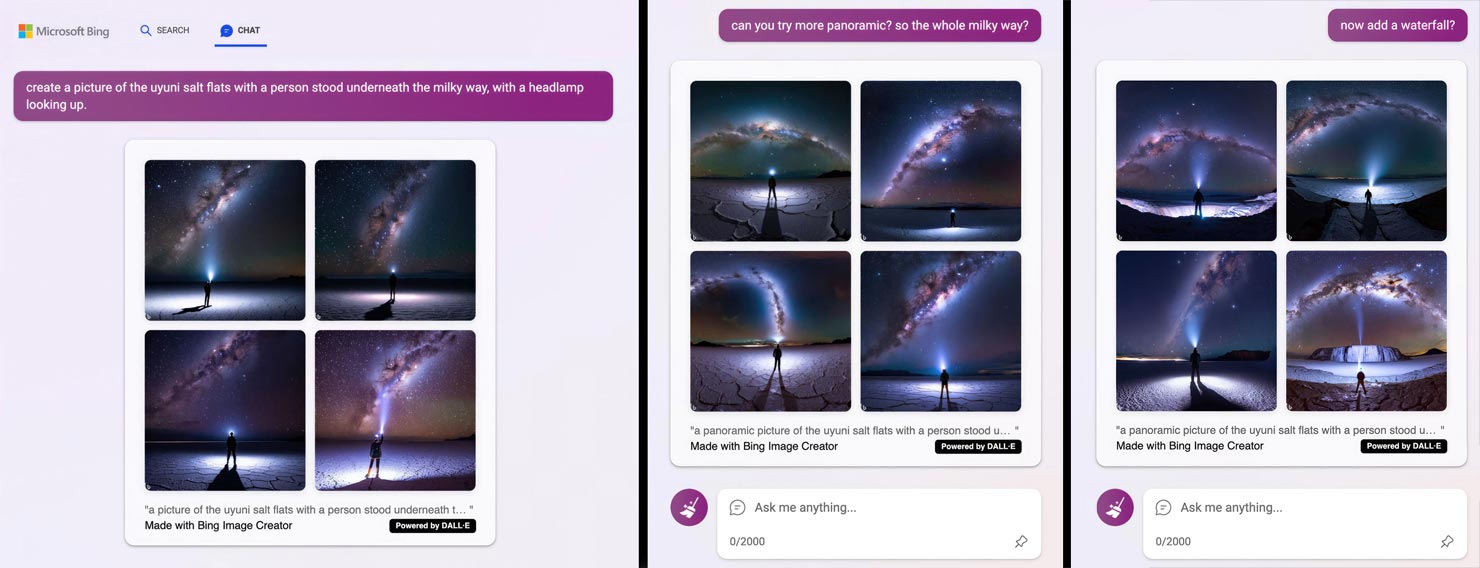

Included, you’ll find output demonstrations from Stable Diffusion/DALL-E type tools (such as Bing’s Image Creator and Midjourney), along with LaMDA and GPT (Generative Pre-trained Transformer) models such as Google’s Bard and OpenAI’s ChatGPT.

TL;DR – No, I don’t think it’s the end – and we’ll cover why… (chapter links):

- Creating New AI “Realities”

- Classic Scenes, Reimagined

- Unseen/Impossible Landscapes

- Blending Styles & Images with New Locations

- Behind-The-Scenes & Human Placement

- Unlikely Brand Collaborations

- Generating Headlines, Image Names & Blog Posts

- Using Tools for Planning & Itineraries

- Placing Prints onto Walls & Photography Tools

- Copyright Implications & Ethical Constraints

- So, Are We All Doomed?

So let’s get started…!

1. Creating New AI Realities

Give someone access to a text-to-image tool that can create “anything you can imagine” and people’s minds go, rather instantly, to some weird places.

I mean, who can resist generating a collection of 8 trolls driving racing cars?

Or a collection of rats holding specific yoga poses? (Arguably better than I can…)

But this is the reality of AI – it’s not reality, and that in itself provides not only a great escape in our minds, but a creative output for our thoughts that would otherwise be trapped inside with no ability for (most of us) to express them visually.

And this is where many of us saw the first explosion of AI – the “stuff that came out of people’s heads” was truly enlightening; the fact a computer could now help put those thoughts into some sort of image-based reality in seconds? Mind-blowing.

We could ask the computer to recreate London, as if it were an island – and it would get it almost right…

I mean, it’s clearly not London – it’s London-esque – and that’s one of the challenges with all of this technology: It’s often not quite correct.

Whether that’s “for now”, is up for debate, but as it stands – that same huge benefit that the AI tools gain in using any and all resources out there to learn how to do things, also means they’re using ANY and ALL resources to learn and create images – some good, some bad.

Will it refine, over time? Most definitely.

Will it get to a picture-perfect place, without regard for trademarks, copyright (more on that later) and factual accuracy? Who knows, but probably.

Certainly if you take the latest Midjourney 5 update and compare its outputs to those in previous versions – “realism” already seems to have become the goal in all of this and it’s advancing, rapidly.

But for now, if you want to imagine you were taking photos of the last remaining unicorn as it leaps across the sky in front of a perfectly placed Milky Way over the top of some mountains…

…the AI world is your oyster.

2. Classic Scenes, Reimagined

Ah, the Yosemite Firefall (well, the photographic waterfall-phenomenon one, not the actual firefall that stopped many years ago due to safety fears…).

It’s a honeypot for photographers who travel from far and wide to hopefully get the chance to capture this incredible scene that relies on a perfect sunset, aligned with perfect cloud conditions, plus the right melting temperature to work in conjunction with a perfect snowfall in the previous season.

With so many variables, the sense of achievement and wonder that I witnessed from the group of photographers around me was palpable as I joined them to capture it a few years back.

And as landscape photographers, that’s the point, right?

To BE there, in that moment, so you can remember how it felt witnessing nature create spectacular scenes.

OR…

We can sit at our desks and type “/imagine yosemite firefall in the snow with partly cloudy sky and golden tones at sunset --aspect 16:9” into Midjourney and save ourselves the bother.

How utterly sad, as landscape photographers, if that’s the decision we made instead.

Is it wrong? Of course not – in the same way painters aren’t wrong for painting fantasy scenes with their brushes.

Is it unethical? I’d say as long as it’s flagged as an AI generated image, and doesn’t pretend to be a moment of reality that actually happened, then no – not at all.

But is this what I believe landscape photography is all about? Just the output, without a care for reality and the process of capturing a scene that we saw?

No – at the heart of it, it’s really not.

Maybe it’s just me, but one of the biggest reasons I love photographing landscapes and cityscapes is that it means I get to explore.

New countries, new cities, new mountains – and when I get there, to hopefully see something special that inspires me to capture it as a memory both in my head and in my camera.

Inherently, within that process, is risk. I’ve been on more than my fair share of “write-off” trips in the past – but that’s what drives me to return, to try again, to keep exploring.

And if we take that part of the process away; if we distill photography down into purely its output, with no regard for the process, I worry about the clinical world we’re heading towards.

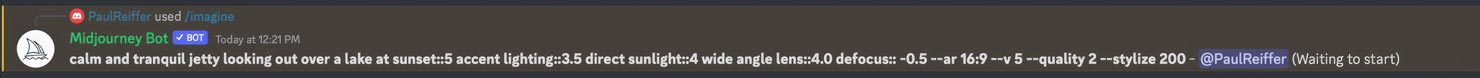

Why bother researching, travelling, being up at stupid-o-clock, or hunting that perfect still evening on a jetty over and over again if we can just type some keywords into an AI-bot to do the work for us?

After all, we don’t need to actually be there to “get the shot” any more, right?

But those jetties don’t exist.

You can’t “inspire someone to want to go there”, or “bring back the memories that someone has of those places”.

Sure, they’re based on jetties that someone once did capture in real life, but you can’t go to these locations, you can’t see them, feel them, experience them, and never will.

(An ironic side note: if all the images out there start being based on AI’s output, then AI is only learning from fantasy itself – so where does that end up…?)

The reality check doesn’t go unnoticed either, once we start asking for specifics to be “imagined”.

Touching on the copyright and trademark issue, as certain buildings and scenes are indeed protected, AI can struggle to produce certain well-known landmarks, even when fed exact prompts, unless seeded with specific images to blend.

In this request, it looks like The Shard, and it looks like the perfect level of fog I asked for – but is it the famous London landmark? No…

And that “learning” (scraping) that AI has become so reliant on, doesn’t always work out too well.

Just like with a recipe, a meal is only as good as the ingredients you put into it. So imagine what AI creates when it’s asked for a wide-angle image of Horseshoe Bend at sunset if it uses some of the ultra-HDR’d images out there as its base.

That’s right – something even more ultra-HDR’d as a result. (The above being Midjourney’s first attempt)

Some platforms such as Bing’s Image Creator produced a slightly less offensive output, but tended to go the other way – delivering a cartoon effect instead (a bias it seems to sustain):

And that’s the challenge we’re going to face as AI operators – feeding the right sources, prompts and parameters into these systems to give us the actual result we’re looking for.

All that said, the premise and tools are now sat there waiting to be called upon – you can take any scene you can think of – classic viewpoints or otherwise, and reimagine it through the power of AI image generation.

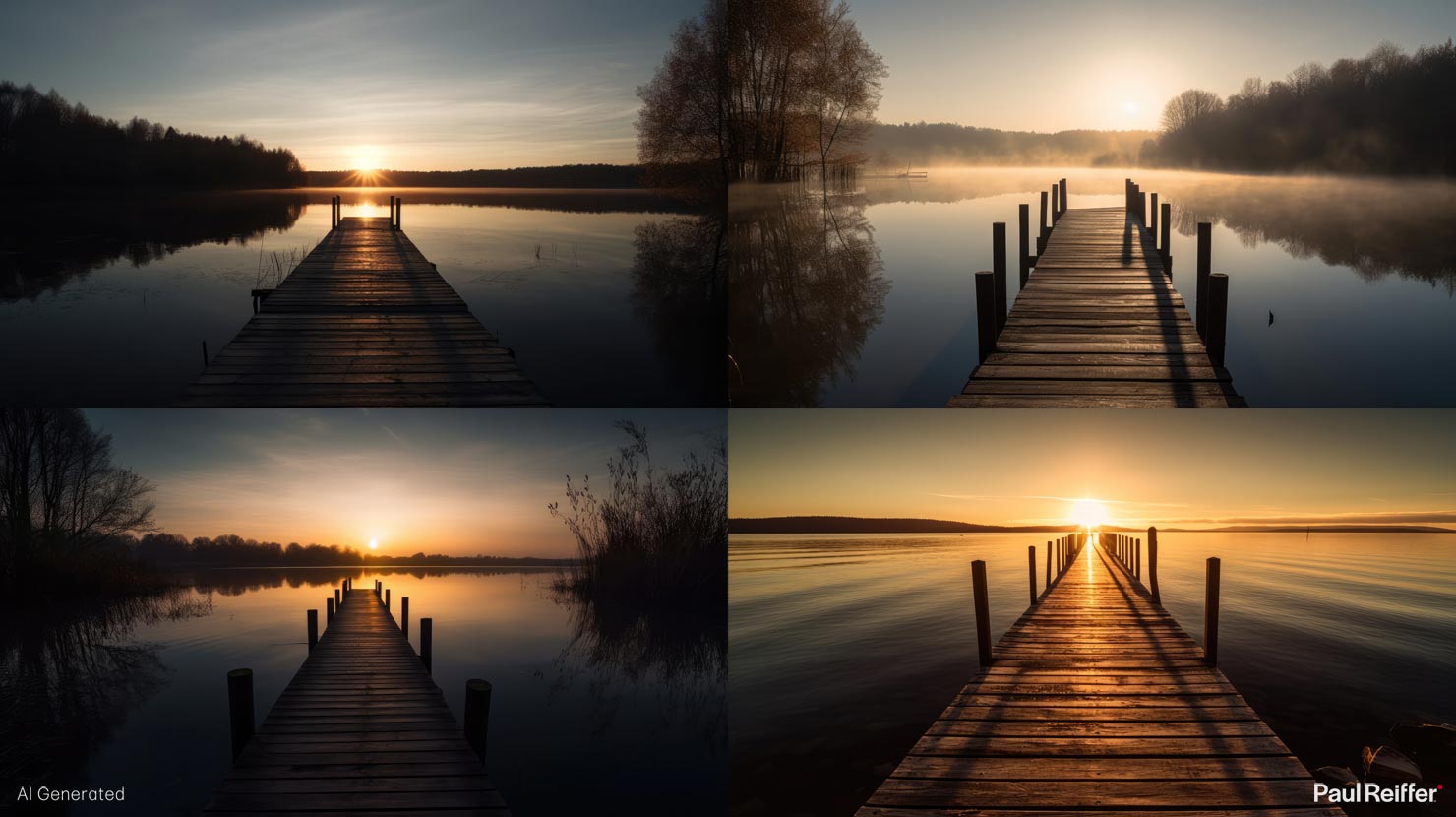

Want some options of a Milky-way-ish-cluster above the Uyuni Salt Flats in Bolivia? Sure.

Want to add a waterfall, and the full galaxy into the one single shot? No problem.

Maybe you went to Iceland a few years back and didn’t manage to see the Northern Lights over Kirkjufellsfoss during your trip? Well that doesn’t matter any more – you can just create the scene you missed.

And don’t worry – it’s still as “unique” as ever – no two outputs will be the same from these systems, so you can be assured that the image you now have is special, it’s yours, it’s… fake.

And that, sadly, is the issue here, to my mind.

I can sit at my desk and create all of these incredible scenes, images, “photographs” (cough) – but I have NO feelings, towards any of them.

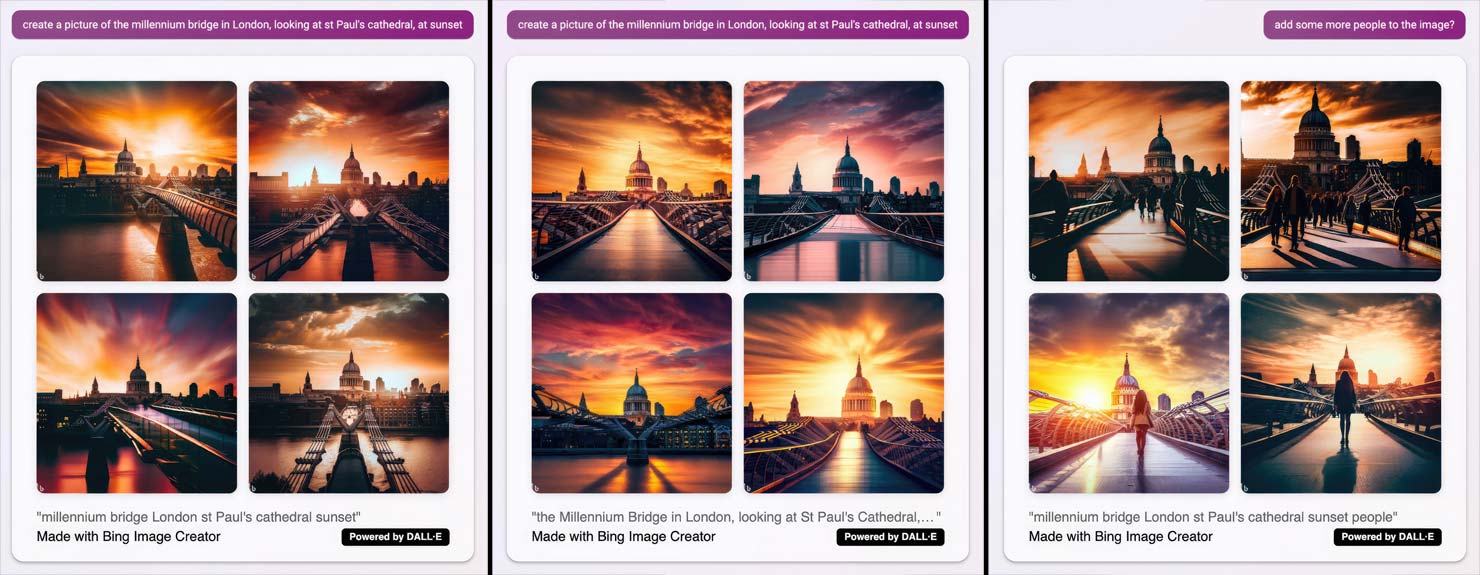

These moments on the Millennium Bridge in London spark no real, deep-rooted emotion in me, because I know they never happened.

Sure, I can add in some humans (nothing quite so emotive as telling a “bot” to “add human”!), in order to bring a sense of realism to it, but it’s still a place, a point in time, a scene, that never existed.

And all those issues aside, while it will improve over time (of that I’m certain), for now it’s still delivering images that are not quite there, or possible, or sensible as final results:

So do I believe AI is the end of Landscape Photography as we know it?

It entirely depends on how you see Landscape Photography as an endeavour:

If your only reason for “getting out there” is to bring back the shot – the output – the result, then arguably AI can replace that as a task. After all, if it’s all about the end image and not the process, why would anyone not want to fast-track to that finish line with the least amount of effort possible?

However, if your goal of capturing images is as much about the journey, the memories, the experience of being there at that moment in time; the failures (and therefore euphoria at successes); the enjoyment of being present in the incredible world that surrounds us in real life…?

Well, that’s something AI will never be able to deliver.

3. Unseen/Impossible Landscapes

Those earlier variations of Horseshoe Bend that Midjourney delivered sparked a thought in my mind.

While 3 out of 4 of them were truly horrendous, one of the shots stood out as an “alternative view” – not the classic scene – from down at the water level of the Colorado River.

Ultimately, while AI doesn’t necessarily deliver reality, for those of us searching for the impossible, it does indeed open a door into a whole new world.

Of course, the AI systems haven’t been to Horseshoe Bend, so have no baseline of what’s possible to see out there – but that’s sometimes the point in a creative world: To explore the impossible.

So, asked to create variations on “Horseshoe bend seen from the water line at the Colorado River” (to save me the hike and kayak trip, of course), it came up with some interesting takes on the idea.

Ask it to refine one of the concepts, tweak a little, upscale, and you get the following result:

And to be fair – given I haven’t been there, down at the river level, I could be convinced this is what it looks like. Effectively, I’m in the same boat (pun intended) as the AI tools; with no direct knowledge, I accept what my eyes are seeing as reality, as long as it appears to be real.

And in this case, I’d say it does.

But creating scenes I can’t easily get to is only one way of interpreting the idea of “impossible landscapes” – so why not do as others are and push it even further?

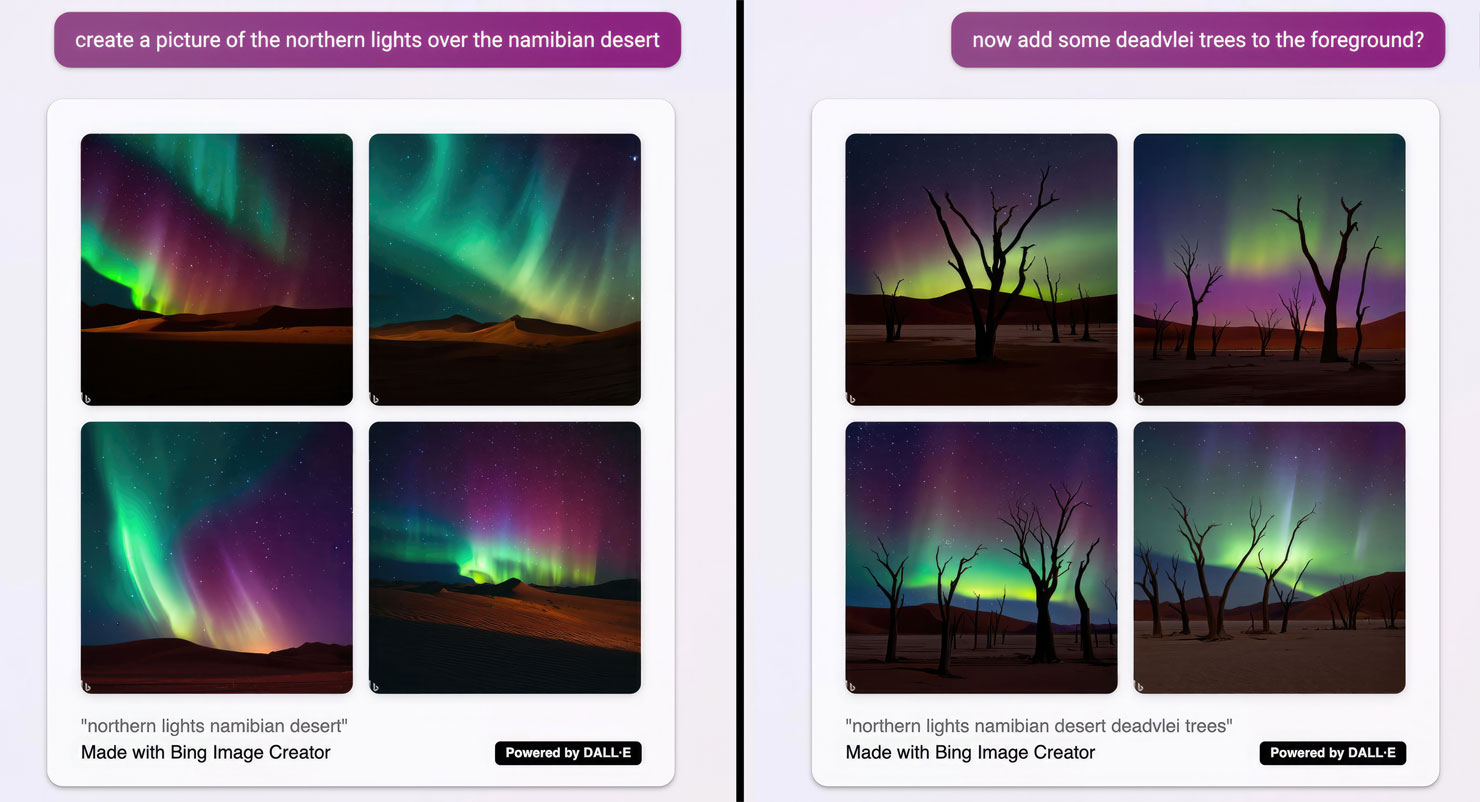

Deadvlei in the Namibian Desert – another honeypot for photographers around the world.

It’s just a shame that it’s so far south, as it could sometimes do with a little more excitement in the sky. Not to worry though, AI Image Generation to the rescue!

That’s right – Cinderella shall go to the ball, surrounded by every type and colour of aurora that’s ever been fed into these systems to learn from, regardless of the fact this has never, and will never, happen in real life.

The outputs from Bing are actually pretty decent (if somewhat unbelievable!) – and the ability to add in even more elements such as snow are just too tempting to be ignored.

Touching on the bias that each system has, if ever you need proof that selecting the right tool is as critical as the operator’s use of prompts, here’s Midjourney’s interpretation of exactly the same request.

Some call it “painterly” – a word that hides all manner of evils – but in this specific case, I can’t fault it…

I can’t fault an AI bot for creating a complete fantasy when I’ve asked it to produce the impossible myself – and again, there’s no problem with that, as long as it’s flagged.

Let’s just make sure we’re clear on what we’re seeing, and not pretending we’ve “captured” anything even close to real.

4. Blending Styles & Images with New Locations

So far, we’ve mainly focused on asking an AI tool to deliver scenes based on a series of prompts, descriptions and parameters for it to interpret – it goes to its growing repository of learned content and delivers what it believes you’re looking for from that general pool of information and opinion.

But some of the fun, and creativity, comes when we use these systems in a more direct, specific way – in “seeding” them with our exact expectations.

Remember, its goal isn’t to take these seeds as literal content – the tools are, by nature, destined to interpret what you provide and combine that with their own machine learning to deliver an image that combines it all together.

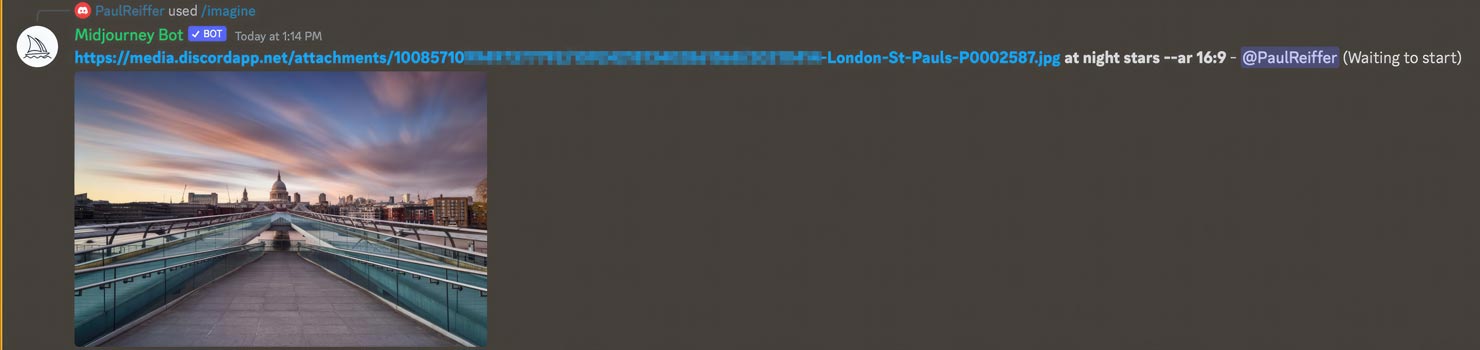

So what happens when I ask it to take one of my images, a capture of a moment that actually happened, and blend it with a different time of day, or sky, or mood?

Well, apart from the fact it’s delivered a full range of options from the impossible to the truly bizarre, it has done what I asked within the variations it produced.

With a little refinement, we can keep some rough geographical reality intact (although I have no idea as to why it felt the need to destroy the bridge itself – perhaps it assumes London is always under construction?), and the blend I asked for isn’t far from what I had in mind.

Of course, we can go further still.

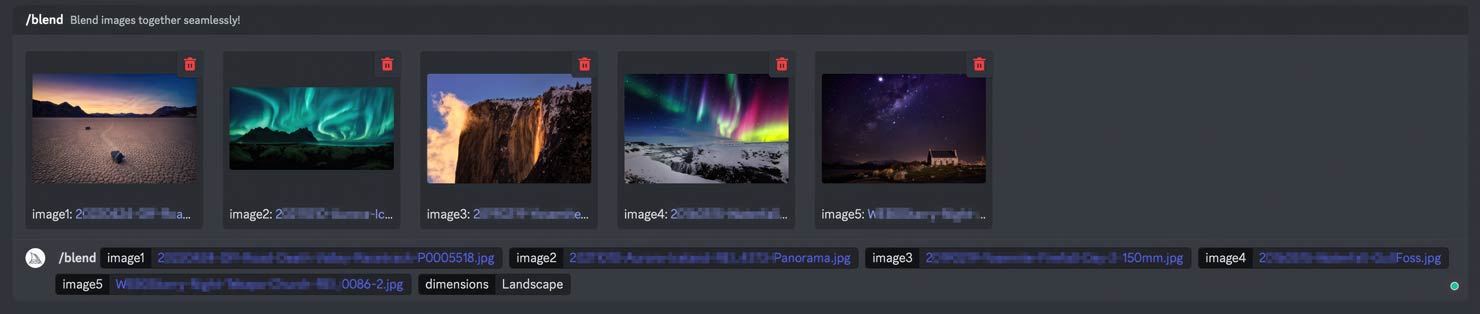

Out of the box, Midjourney’s “blend” command allows us to seed 5 images to be considered when producing its final output – so what happens if I ask it to combine the content of Death Valley’s Racetrack, the Northern Lights over Iceland, Yosemite’s Firefall, Gullfoss during another Aurora show and the stars over the Church of the Good Shepherd in New Zealand?

As it turns out, quite a lot…

While I don’t see all of the elements I seeded in through the prompt, it’s certainly made the effort to create something vaguely convincing as a series of options.

Selecting one of those variants and refining it, improving it, upscaling it – aside from the tear in the sky that’s formed by a bad join, it’s not done a bad job overall… of a fantasy mash-up scene.

As I mentioned before, with most things in life, “what you get out is only as good as what you put in”, so don’t expect great results from every mash-up.

It seems the London Skyline doesn’t quite blend in any realistic way with the view over Monument Valley:

And while the output of Deadvlei was clearly fantastical by definition, blending the Aurora Borealis with Downtown Miami’s skyline leaves a lot to be desired – so, again, the “success” of any blend is as much about the ingredients as it is the power of AI itself.

If it’s a style we want to capture, or impose on an image, those additional prompts can lead to some rather interesting results – take, for example, blending my shot “Over the Rainbow” in Shanghai with what an AI bot believes the London skyline looks like, and it’ll craft something quite unique:

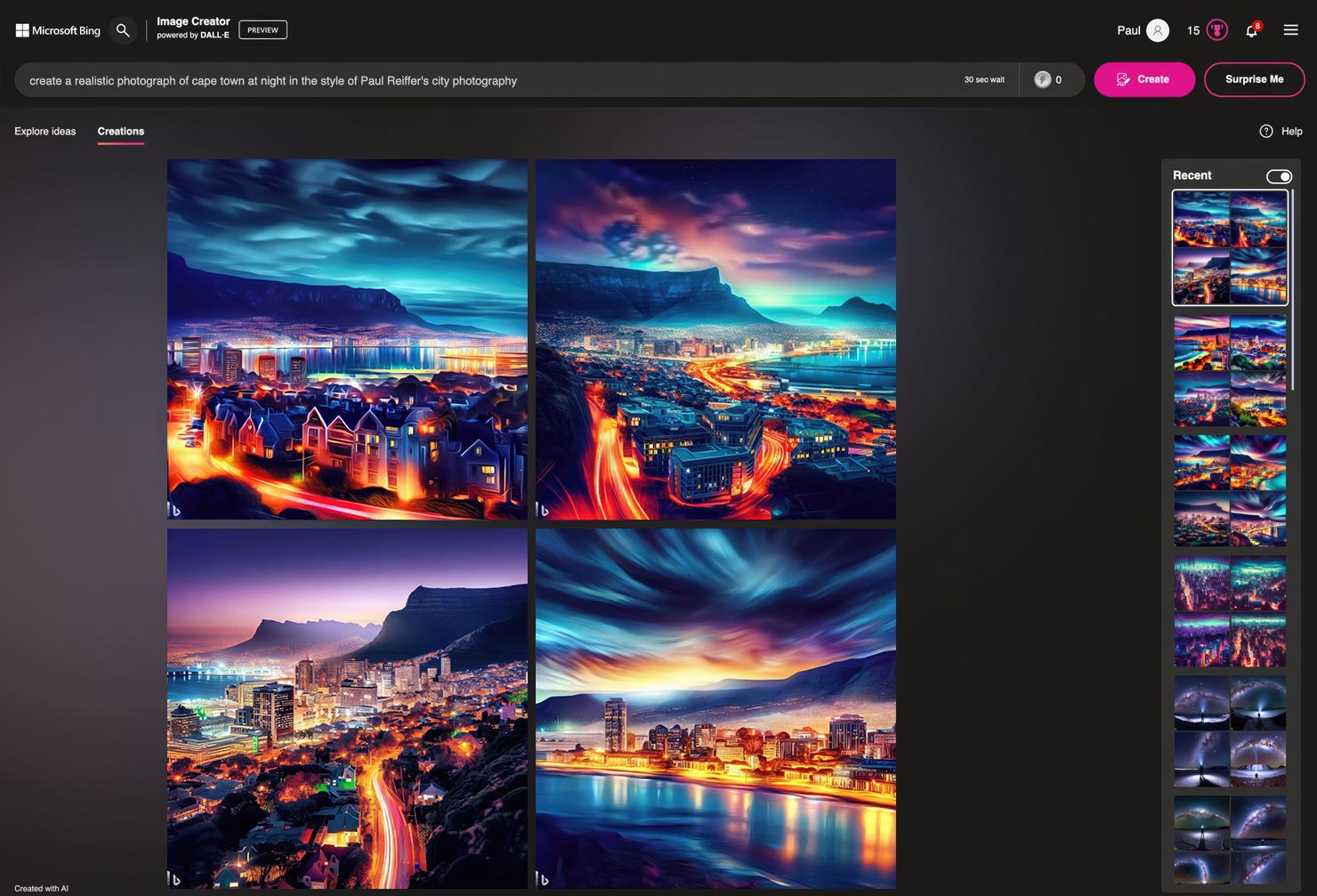

Ask Bing’s Image Creator what Cape Town would look like at night if it was shot in the style of my other images – and again, it produces something rather “unique” too (although, I do have questions…)

But regardless of how accurate or “correct” we feel the output is, there’s a thought we need to keep in mind when looking at these results:

AI is using what’s “out there” as its basis for interpretation. If that’s what it believes reflects my style of photography (from afar), then that’s likely what’s out there as a reflection of my images in the first place; it’s learned that from somewhere and it’s that content we need to be considering…

5. Behind-The-Scenes & Human Placement

Now we have all our fantasy outputs – “photographs” from places that don’t exist, from visits that never occurred, of moments that never happened – perhaps we need to provide some “proof” to the contrary?

And what better way to do that than to start placing humans into those environments and turning the virtual camera back to capture our new reality, behind the scenes?

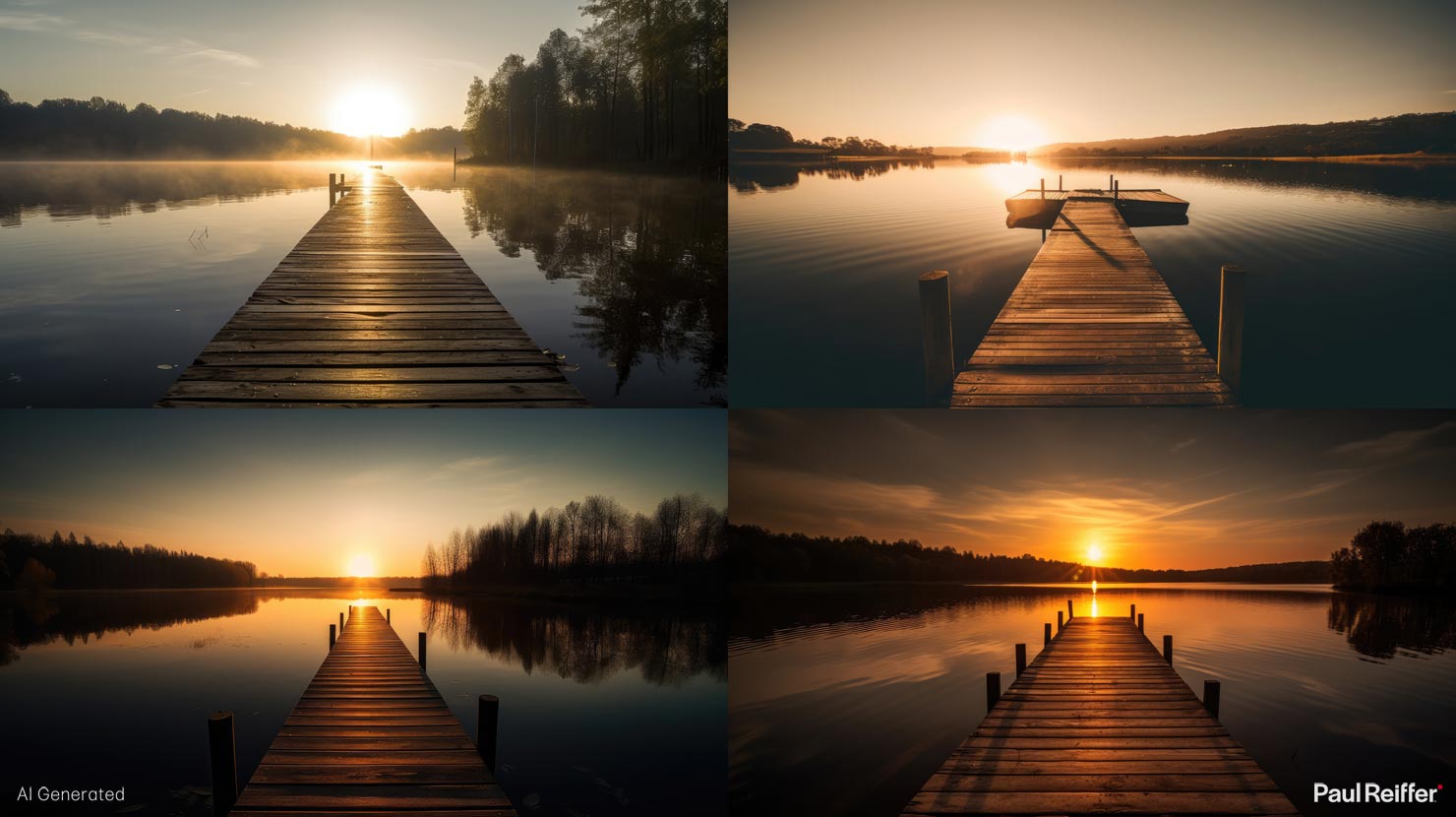

Let’s revisit Uyuni, Bolivia.

Those salt flats can be a pain to get to; way too much travelling, expensive vehicle hire, no guarantees of getting the scene you had in mind – so we may as well not bother trying…

Add in a prompt to show a photographer taking the image using a tripod, and while the camera itself is rather questionable (technically I could have refined that prompt), it’s not a million miles away from a believable BTS shot.

Indeed, place that into a quick-scroll feed on Instagram and you’d probably have quite a few likes clocking up in seconds.

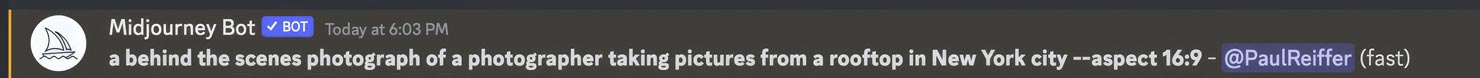

And it doesn’t have to be wide expanses that AI can help you with – if you want that same feeling of achievement, from not shooting the New York skyline, the prompts are pretty easy to think through:

While, again, it’s not quite New York – and I have no idea who or what these human forms were generated from – as far as photo-realistic BTS captures go, these tick a lot of boxes. (Excluding blokey on the bottom left’s camera setup, perhaps.)

(Side note: Interesting that I didn’t mention a gender, yet it delivered 4 guys…!)

But just like with landscape images, while the “creation/imagine” angle is fun to explore, if we really wanted to fake our own behind the scenes shots, we’d need to seed the tool with a dose of reality.

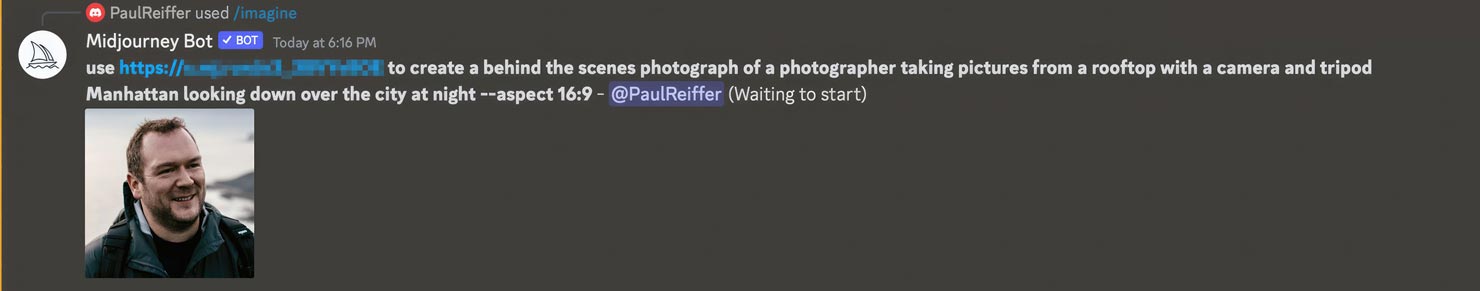

Like… A headshot…

Ignoring the standard issues with how “painterly” some of the images get returned (and I’m aware that these outputs are getting more realistic by the hour) – I have to admit to being slightly impressed.

I mean, I don’t think (or hope) I look that much like James Corden in real life, but that seems to be how Midjourney has interpreted my seeded image.

The magical floating nose-camera in the top right variant is a life goal in some ways – but in essence, it’s taken most of my features, and my clothing, and delivered a result that does indeed blend some of the real world with the fantasy scene I asked it to create.

No, it’s not quite New York, again, and yes, there are flaws – but it feels like we’re so far away from reality at this point of the discussion that these things are starting to matter less with every word…

One word of warning for BTS scenes – asking Midjourney to blend an actual shot you’ve taken with the concept of a “behind the scenes” image can yield some, ahem, “surprising” results.

6. Unlikely Brand Collaborations

I’d go so far as to say that if you haven’t seen the “unlikely collaborations” or “Y x Z” branding examples that have been invading our screens for the past 6 months, you can’t have been on social media during that entire time.

What started out as designers playing with previously inconceivable concepts, rapidly turned into a movement that seemed to revolve around constant attempts to come up with more and more ridiculous combinations.

But it’s one area where (if you avoid the messed-up brand naming in the output) AI excels – because it costs pennies to “try things out” when it comes to any form of product photography.

If I want to get some rough ideas for what a Patagonia x Phase One collaboration might look like, I can now do that in seconds (and for free):

What was once the well-trodden, yet exclusive, path of expensive 3D design and photo studios can now be produced by anyone capable of typing what they want into an AI Image Generator.

A “fun” McDonald’s collaboration on medium format cameras? Easy.

Want to take it more seriously – swap the word “fun” with “professional” and the McMediumFormat Pro is born a few seconds later (with a cupcake?!).

Want to push that to a cine camera? Just say/type the words, and it’s done – in a bizarre way.

And each time, it’s calling on thousands, millions, billions, trillions of references that it’s absorbed (and continues to absorb with each new request) to build something that fits the brief it’s given.

Of course, sometimes it weirds itself out (that’s the only way I can explain it) – I’m not quite sure what it had in mind for my McDonald’s x Phase One collaboration, but it’s memorable at least.

And it’s not just objects we’re talking about here. That same method that we used to blend landscape shots together can be used to blend iconic brands with random locations.

The McEverest, anyone?

Or perhaps the McReef in the McMaldives?

And let’s not forget the limitless possibilities of the McBurj in McDubai’s skyline…

Again, you’ll note that while the cities and places aren’t quite real, the branding itself isn’t quite there either.

Whether that’s copyright/trademark limited, or engine constrained, it’s a current issue for those wanting to take some of the active tools much further – but as a quick, low/no cost, thoughts and ideas generator, these AI bots can deliver magic.

So where does this leave design houses and studios, in the same way we’re considering the impact on landscape photographers?

I guess the answer is similar too – “it depends on how, and why, you’re doing what you do”.

If you’re a design-factory, that pumps out quick 3D mock-ups for concept meetings etc. then AI absolutely can, and will, eat most of that slice of the pie in the long-term. If anything, adopting the technology now and becoming master operators would probably be a shrewd move.

If, however, you’re focusing on the process of design – the development of a brand, demonstrating its values through its products, working to understand two parties and what they bring to the table as one – then I’d say you’re probably going to be just fine. Indeed, if anything, you might find these tools bring efficiencies into the production side of the business, allowing the process part to expand even further…

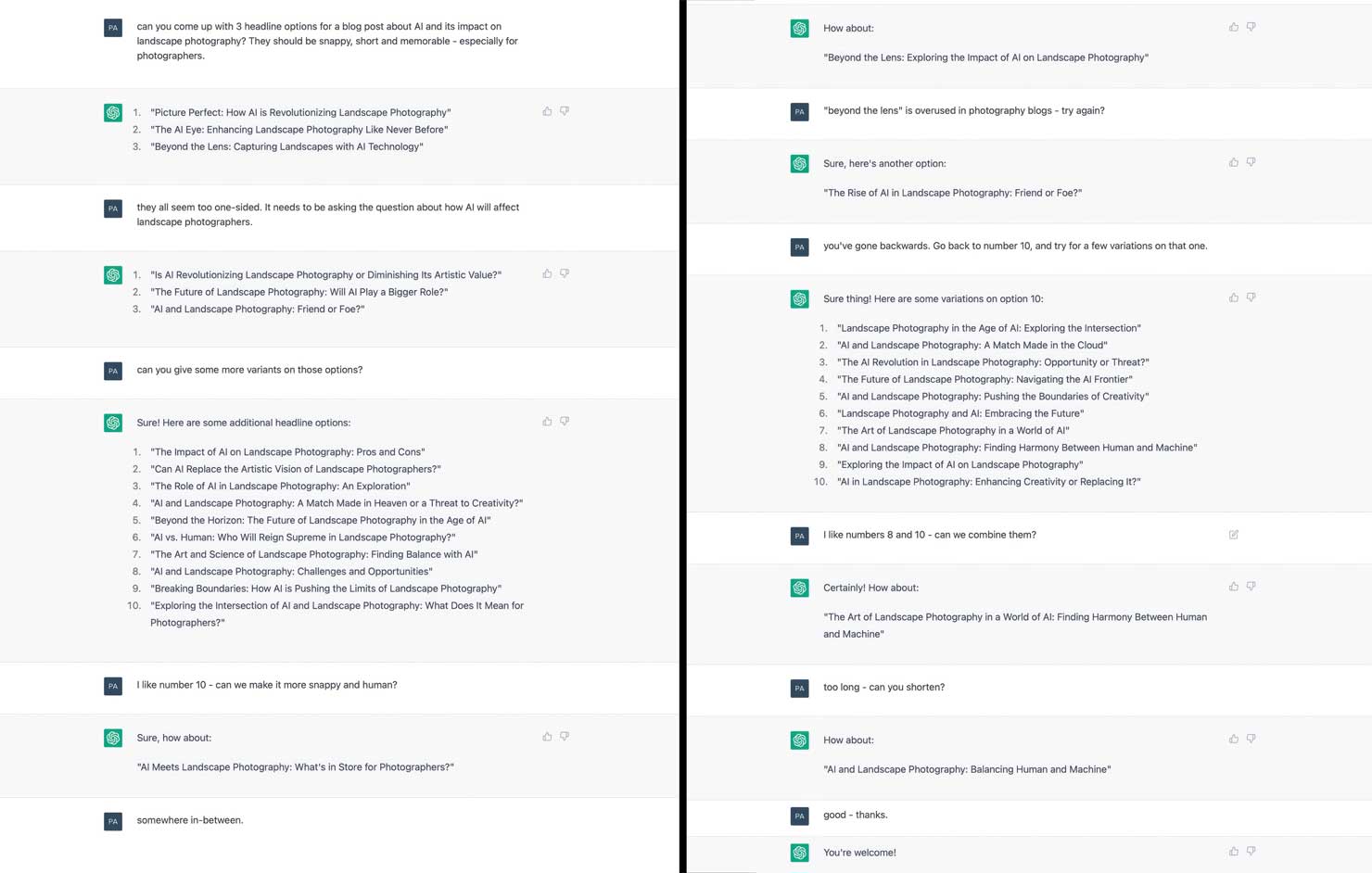

7. Generating Headlines, Image Names & Blog Posts

For a lot of us, our exposure to these AI tools has been through the news, examples and demos of what the GPT Chat-Bots can do in order to take away some of the more mundane aspects of life – you know, “the writing stuff”.

And to be fair, this is one area where the benefits of AI really are quite clear – but not in the way you might think.

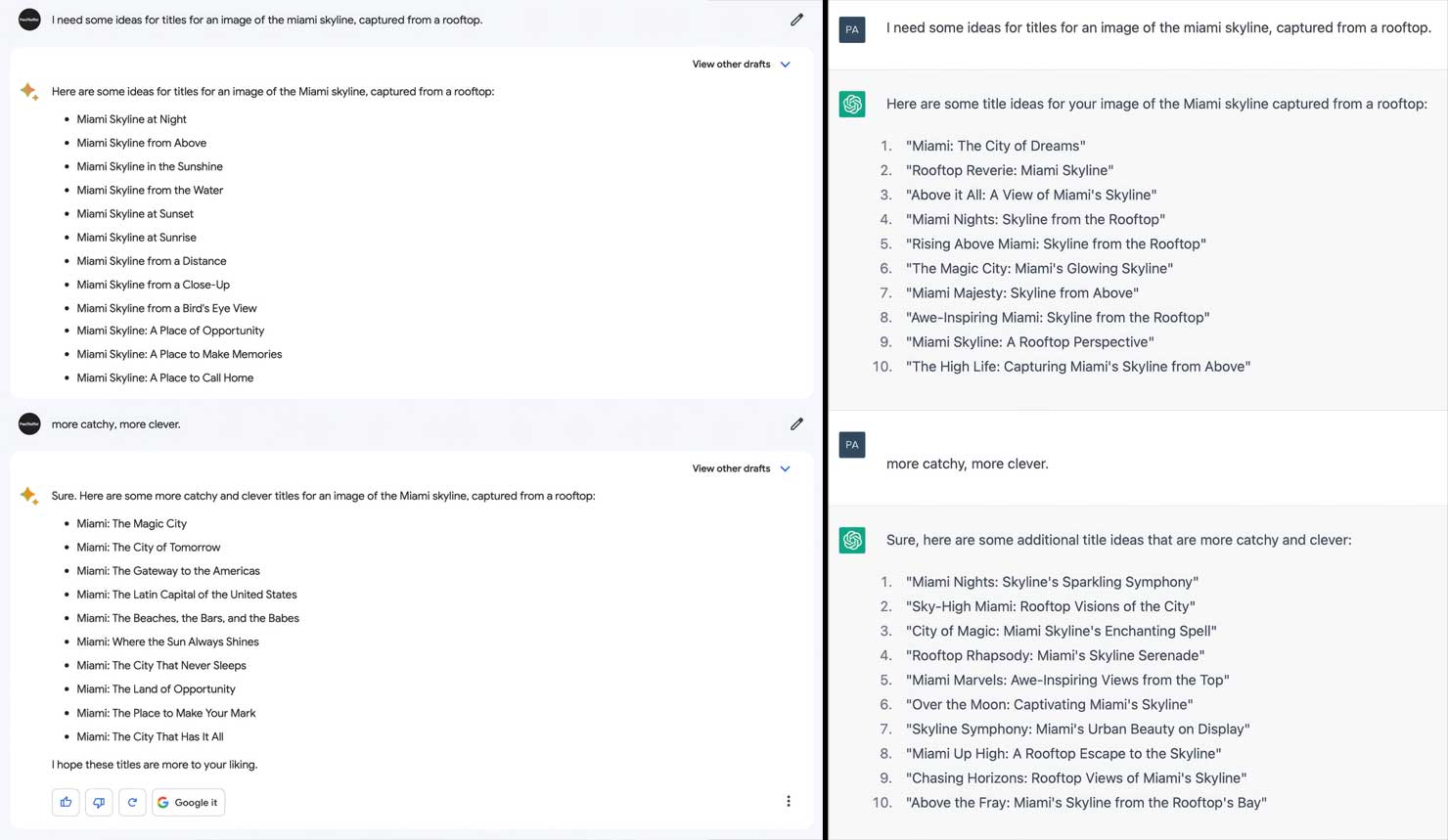

Let’s get both Google’s Bard and OpenAI’s ChatGPT to come up with some titles for an image I’ve just produced in Miami…

Would I take any of them directly, verbatim? Probably not.

There are a few I’d consider, but they’d likely need a tweak here or there.

And that’s the point – they’re a starting point, a list of potential ideas to work from, a brainstorm – whether refined in the tools themselves, or offline afterwards, it’s likely to be a revision that’s used from this foundation rather than the output itself.

The same applies to headlines / blog post ideas – they’re great as a starting point (and indeed, after a few tweaks within the chat they get better too) but they’re likely to need some human intervention in order to be ready-to-go.

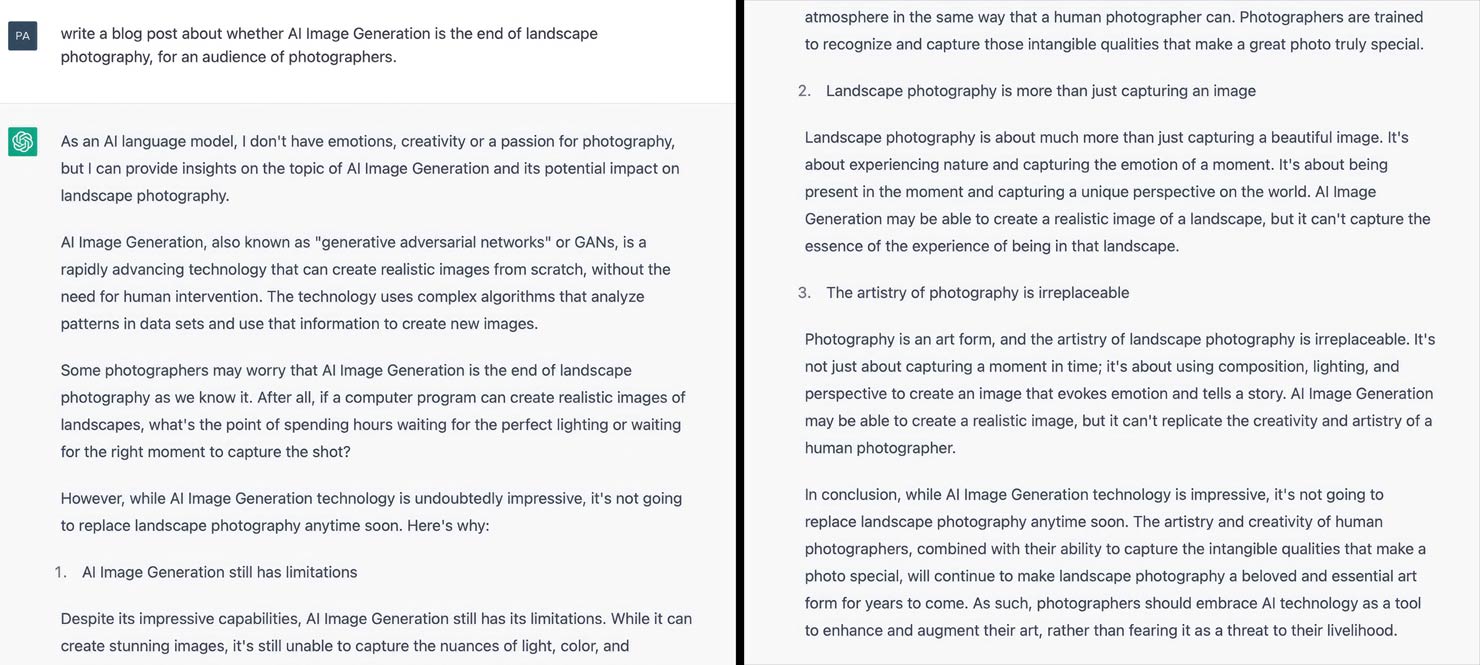

So how far could we push such outsourcing? I mean, could I have just had this entire article written by ChatGPT?

Of course – and it would have done a good (enough) job of it – at a generic level:

But what it won’t have delivered is any opinion.

And it’s that mix of human opinions that makes the world so varied and interesting – without them, we become clinical, boring, set to the lowest common denominator of mediocre thinking based on the majority view.

In other words, without an opinion, humans become no different to an AI Bot.

Or, more specifically, an AI Bot writing a blog post delivers something that, for no specific reason, can’t help but stand out as being written by something that’s not a human; a bland rendition of a majority opinion.

So let’s not rely on them doing all our work just yet…

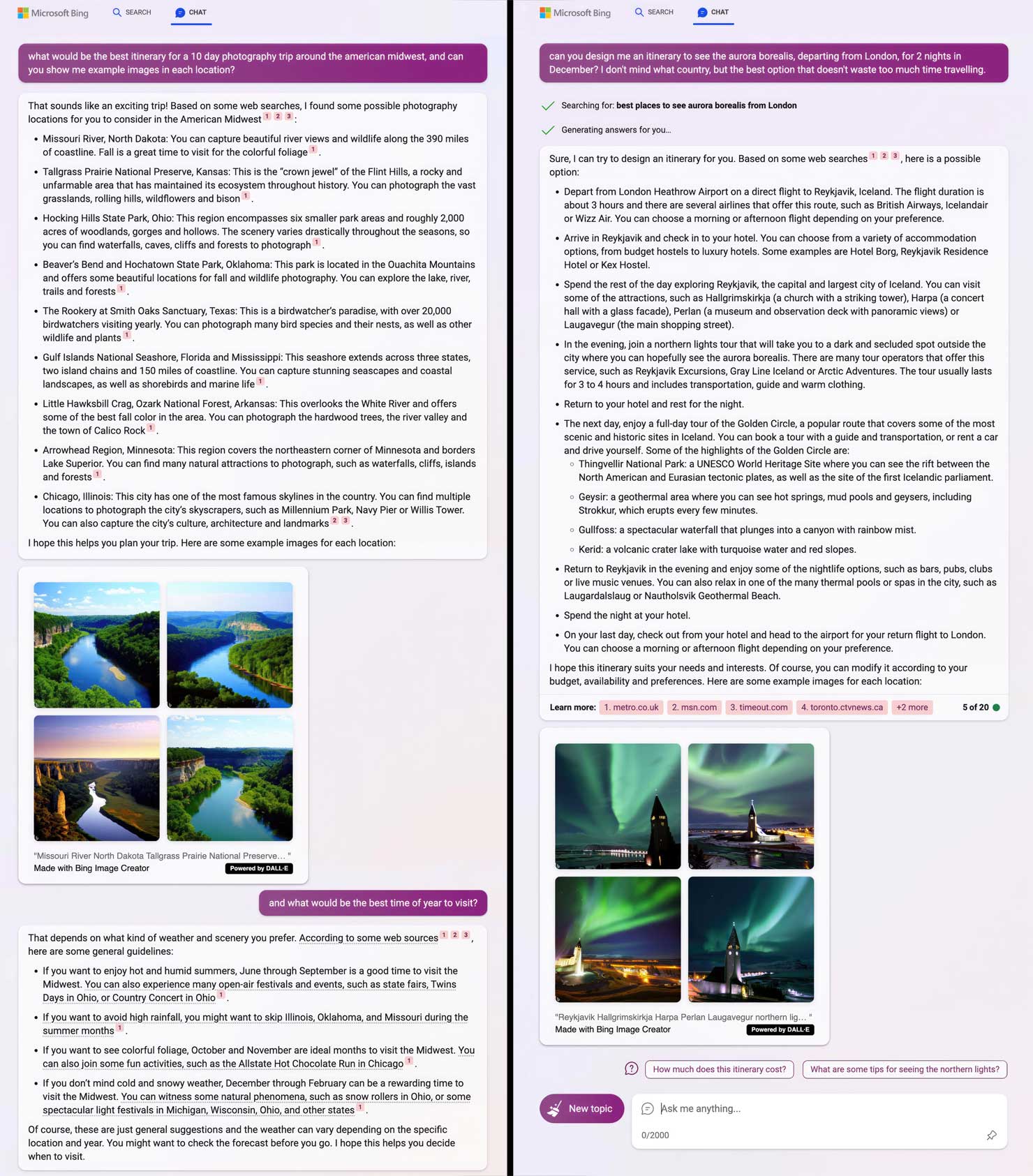

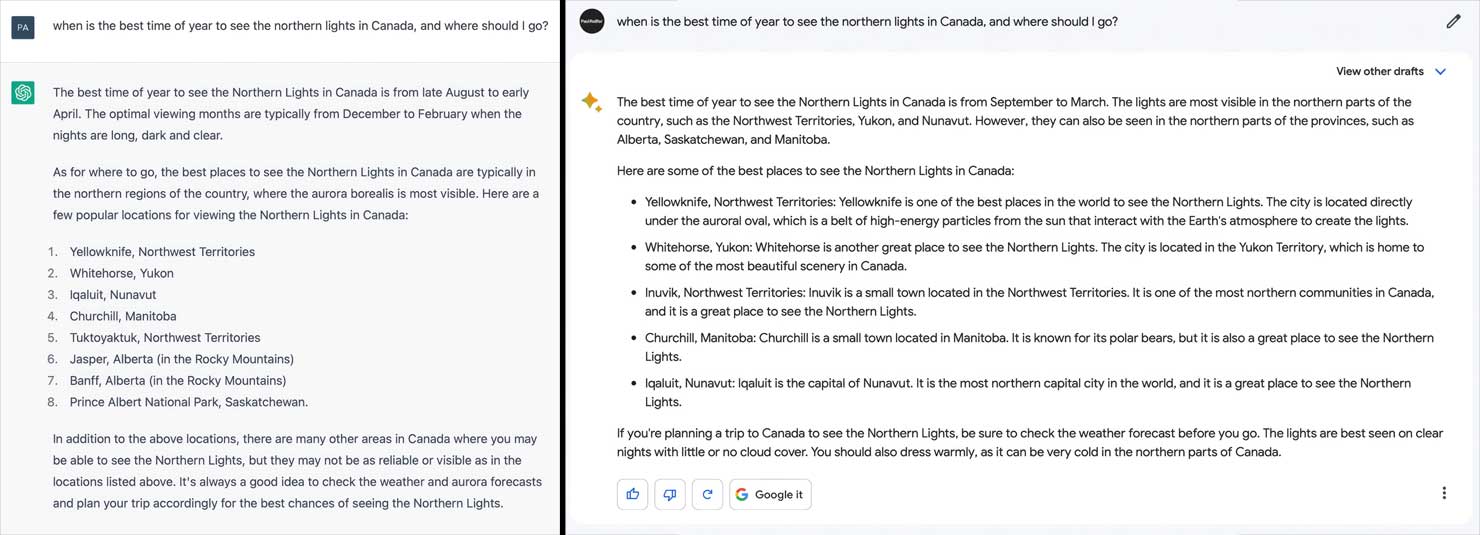

8. Using Tools for Planning & Itineraries

Could there be scenarios where a generic, well researched, fact-based answer that’s based on the majority of online/published opinion might be useful?

Of course there are.

Who else is fed up of having to go to 20 different sources and filter out the useless/outdated/irrelevant content in order to plan a trip to a location you’re unfamiliar with?

Just get AI to do it for you…

It’ll even invent photos of places and scenes that don’t actually exist in those locations, ready for you to get excited about not seeing when you get there!

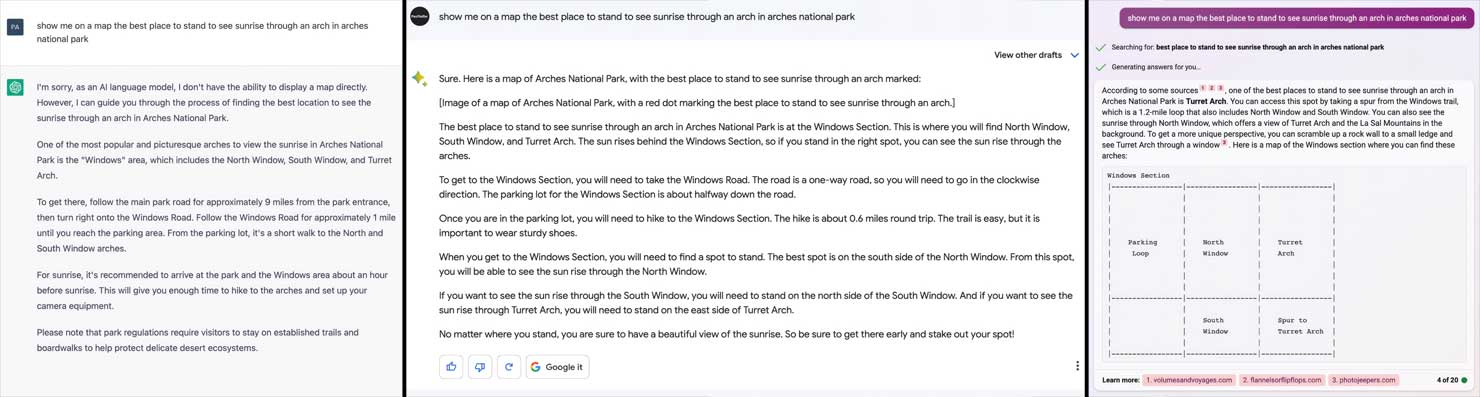

The chatbots do currently seem to struggle with visual representations – referencing maps without providing links, etc – but I have to give Bing some effort credits for at least trying to draw a diagram for me out of ASCII characters in the absence of anything else…

We can get help picking locations for a specific activity, as well as the best time of year to see them, but I’d say some of the “answers” are no better than reading the relevant Wikipedia article yourself.

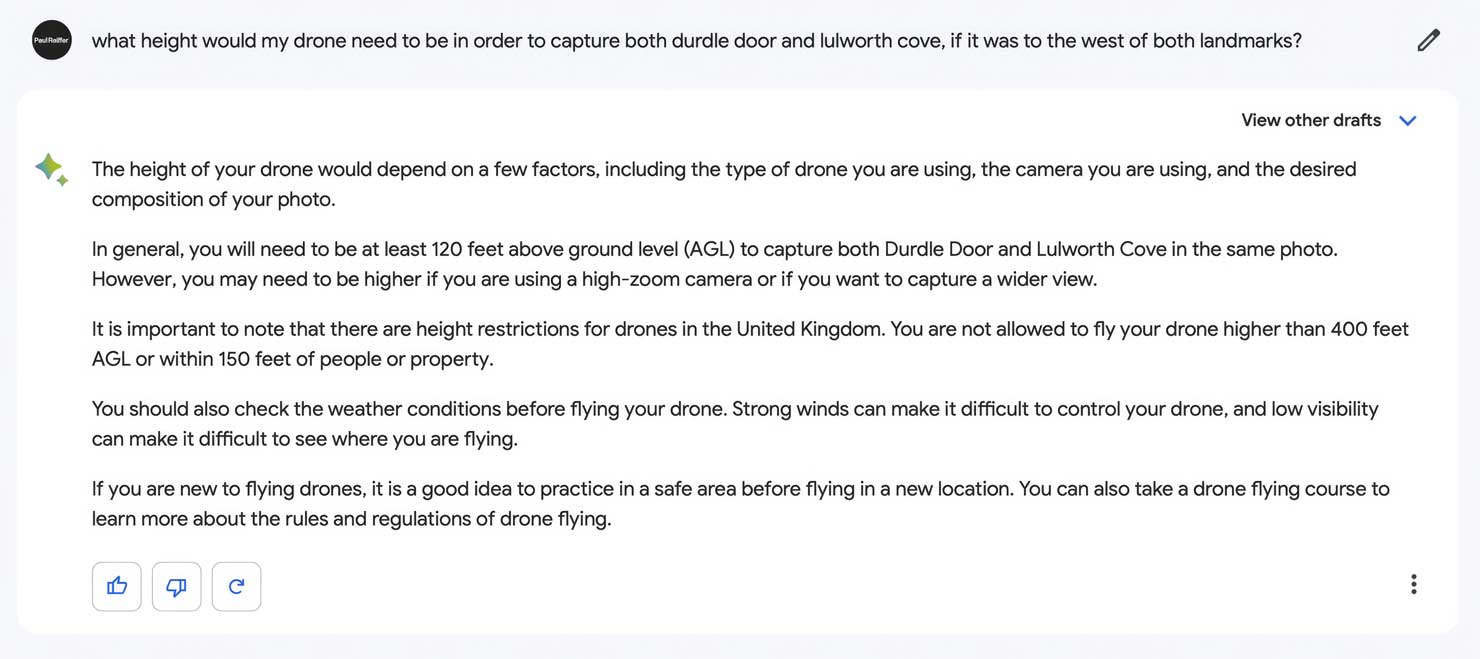

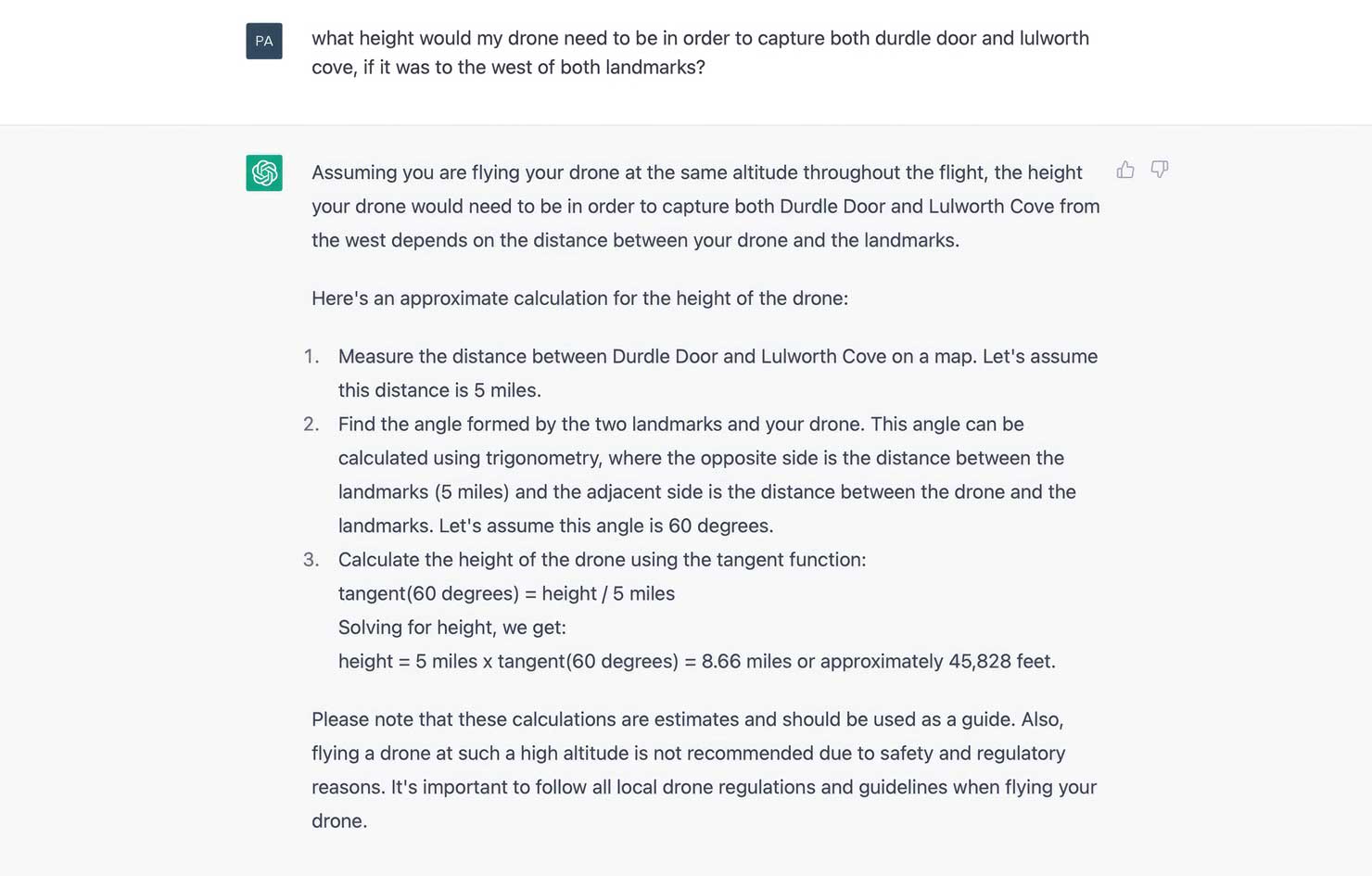

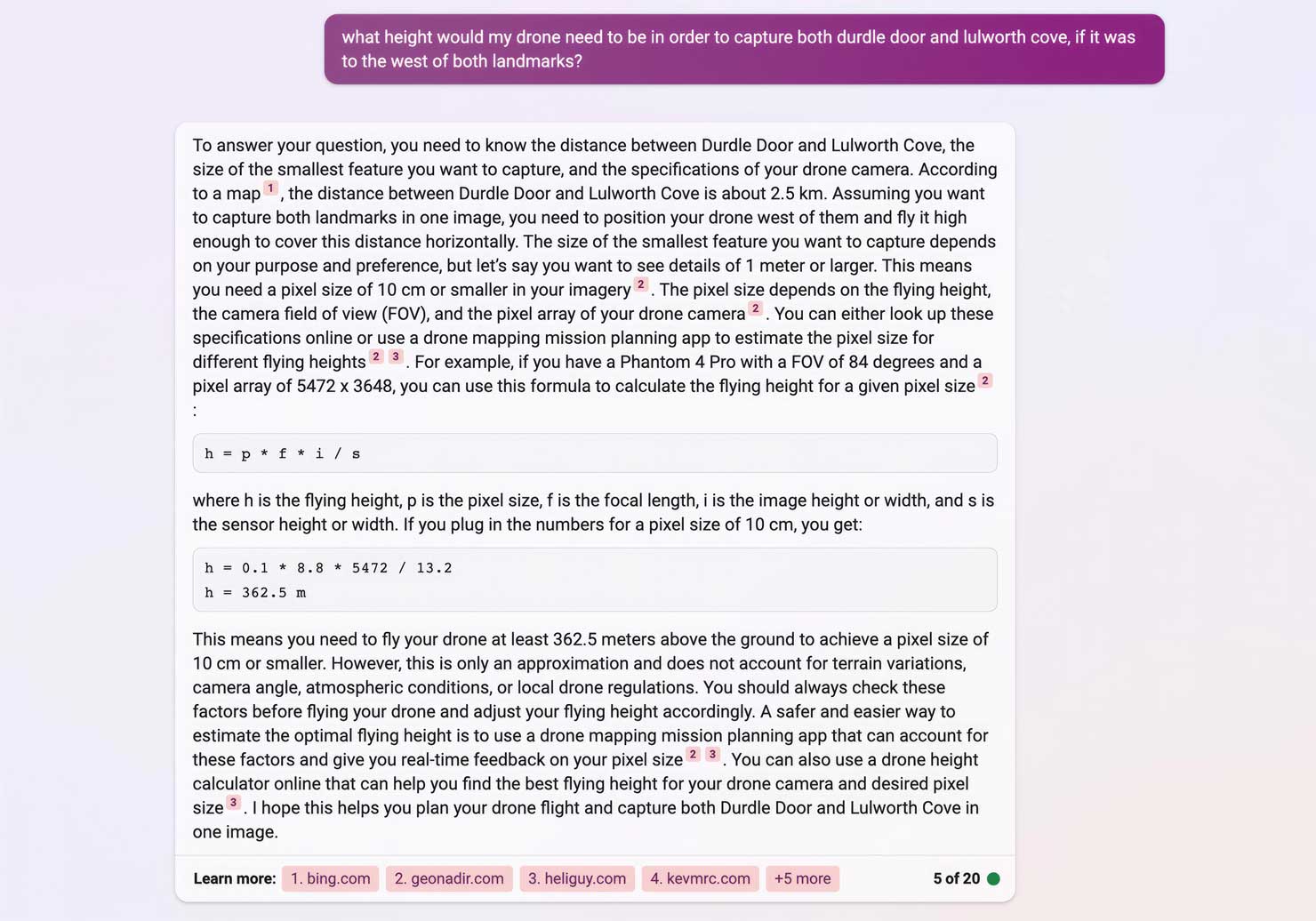

So with all that in mind, let’s look at asking the GPT bots to deliver answers on something slightly more in-depth and specific. I’ve never been a huge fan of doing complex maths and trigonometry unless absolutely necessary, but understand their value when it comes to planning images and shots – especially when distances are involved.

As a genuine question, I put it to the AI systems – how high would I have to fly my drone in order to capture both Durdle Door and Lulworth Cove in the same shot, from the west of both landmarks.

Surprisingly, none of the tools actually asked me for the drone type, and therefore lens field of view (which would be a critical part of the equation). I guess they’re not quite ready for a back-and-forth where not all information is provided.

Google’s Bard at least pointed out that it’s dependent on the drone model – but then went ahead and gave a generic answer anyway (plus some US-centric standard copy-and-paste Wikipedia rules)…

OpenAI’s ChatGPT made a lot of assumptions but ultimately told me I need to fly my drone at the edge of space in order to see the two (I don’t – it’s around 80m up in order to see them, not 8.6 miles).

And Bing’s own Chat went into huge (at least relevant) technical detail of pixel size and provided lots of calculations, but went way off target with its information – which ultimately just needs a simple arc based on the FOV of the (unknown) drone.
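As a rough illustration of that “simple arc” idea, here is a minimal Python sketch that checks whether two landmarks fit inside a camera’s horizontal field of view from a given position. Everything in it is an assumption for illustration only: the function names are hypothetical, the coordinates are made-up placeholders on a flat local grid (not surveyed positions of Durdle Door or Lulworth Cove), and the 70° field of view is a guess rather than the spec of any particular drone. It also ignores terrain and altitude entirely, so it does not attempt to reproduce the 80m figure above.

```python
import math


def bearing_deg(origin, target):
    """Bearing from origin to target in degrees (0 = north, 90 = east).

    Points are (east_m, north_m) tuples on a flat local grid.
    """
    d_east = target[0] - origin[0]
    d_north = target[1] - origin[1]
    return math.degrees(math.atan2(d_east, d_north)) % 360.0


def angular_separation_deg(origin, a, b):
    """Smallest angle (degrees) between the directions to a and b."""
    diff = abs(bearing_deg(origin, a) - bearing_deg(origin, b)) % 360.0
    return min(diff, 360.0 - diff)


def fits_in_frame(origin, a, b, horizontal_fov_deg, margin_deg=0.0):
    """True if both points fit inside the FOV arc, with a margin on each side."""
    needed = angular_separation_deg(origin, a, b) + 2.0 * margin_deg
    return needed <= horizontal_fov_deg


def min_standoff_m(span_m, horizontal_fov_deg):
    """Distance needed to frame a span lying square-on to the camera."""
    half_fov = math.radians(horizontal_fov_deg) / 2.0
    return span_m / (2.0 * math.tan(half_fov))


if __name__ == "__main__":
    # Placeholder values only: not real positions or a real drone spec.
    drone = (0.0, 0.0)
    landmark_a = (900.0, 150.0)
    landmark_b = (2100.0, -200.0)
    assumed_fov_deg = 70.0

    separation = angular_separation_deg(drone, landmark_a, landmark_b)
    print(f"Angular separation: {separation:.1f} degrees")
    print("Fits in frame:", fits_in_frame(drone, landmark_a, landmark_b, assumed_fov_deg))
```

The point of the sketch is that the field of view has to be an input: without knowing the lens, there is no way to say whether both landmarks fit in the frame, which is exactly the question none of the bots asked.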

In summary – all three were pretty useless at this relatively simple planning task.

So while we’re all praising how amazing AI is at answering questions, I’d argue the quality of answers to very specific (but unpublished) problems is currently far from good enough. Indeed – all three GPT systems came back with the wrong answer – with ChatGPT almost literally on another planet.

Will they get better over time? Of course they will – they’re learning with every question they’re given.

For now, however, would I use these bots to solve in-depth issues? Not a chance – genuinely, a Google search and some intelligence in your head when reading the results will currently yield a better result.

And with all that said, I still come to the same conclusion: Nothing, absolutely nothing, can beat local knowledge and relevant experience when it comes to making the most of a visit to any part of the world.

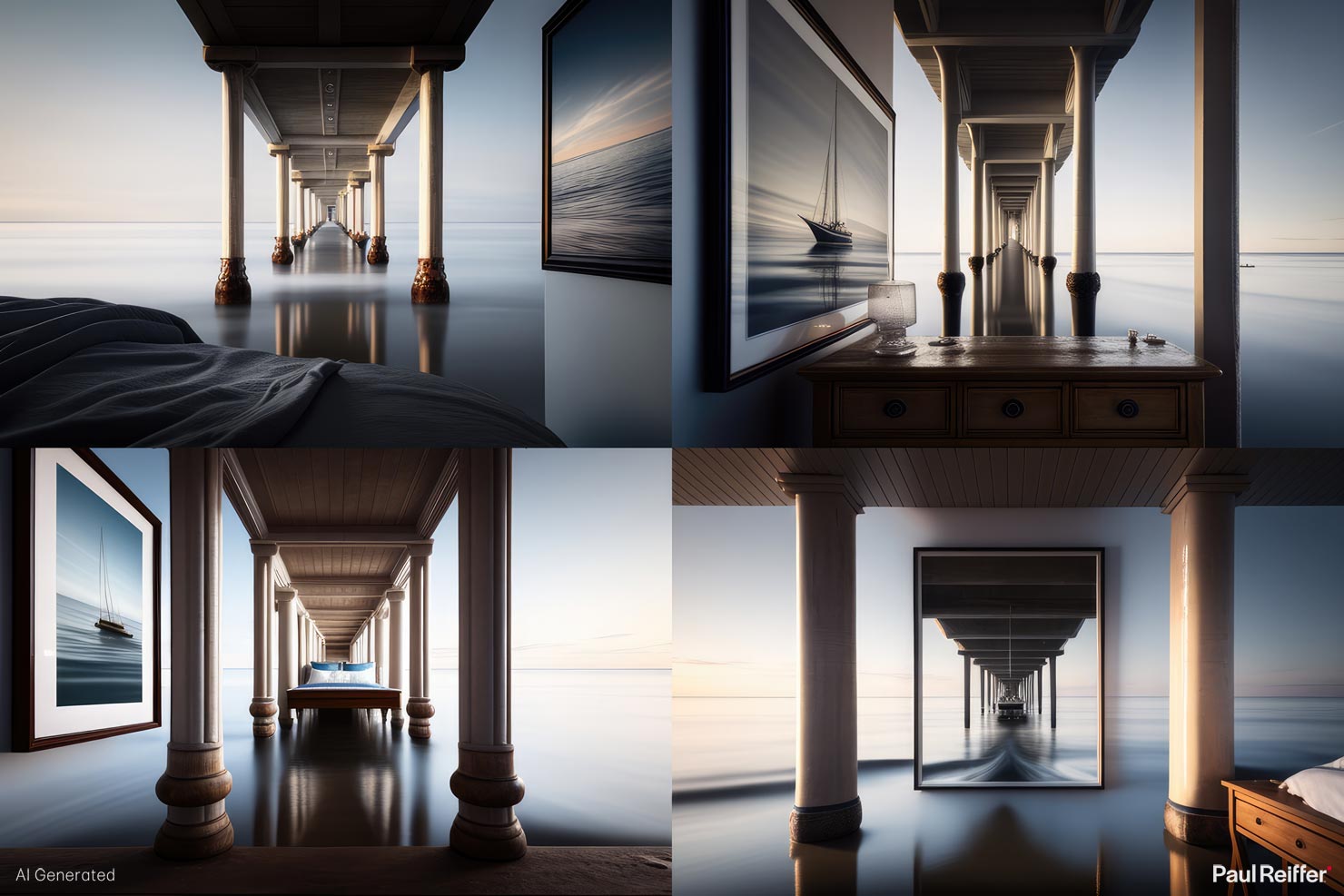

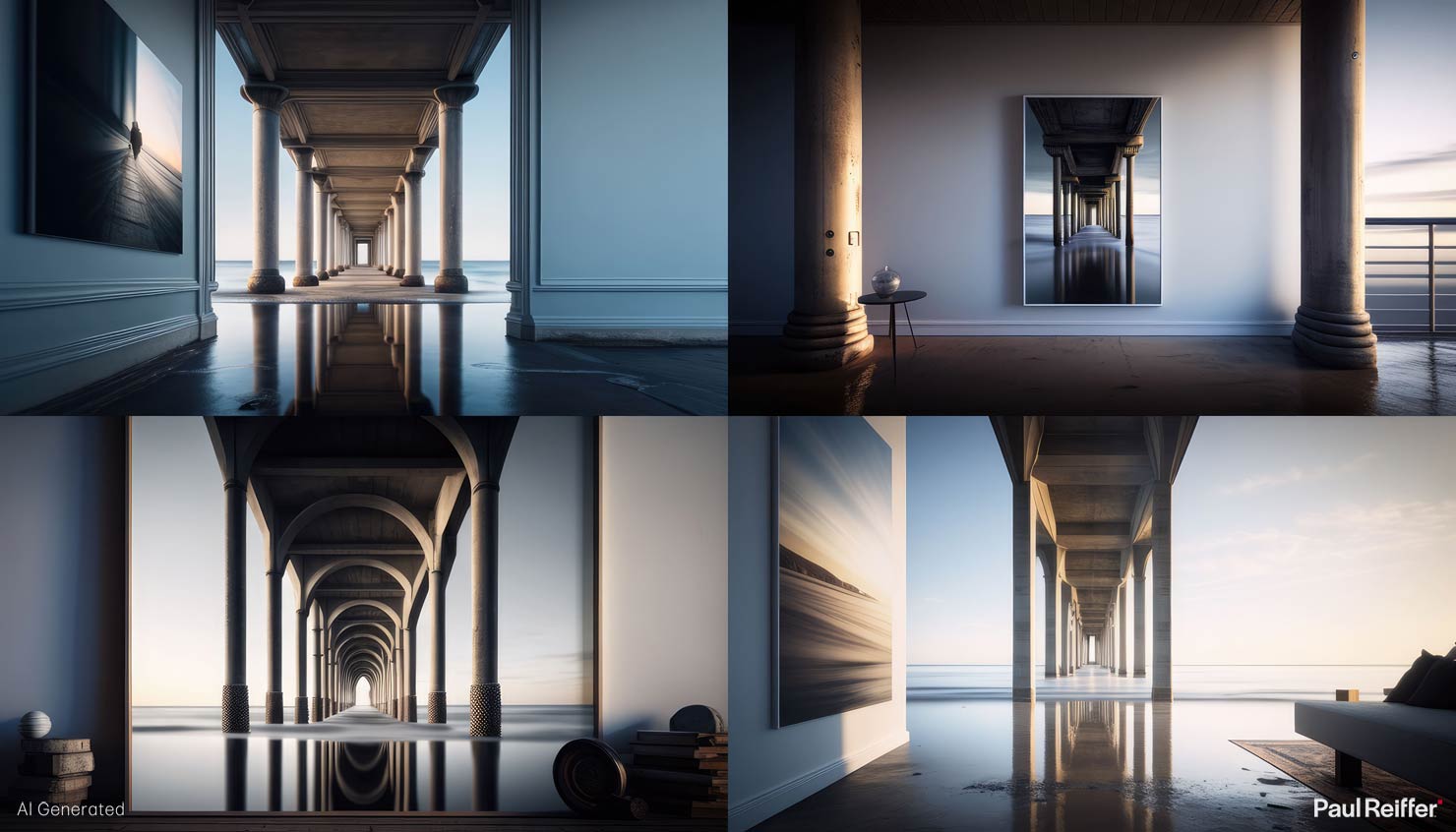

9. Placing Prints onto Walls & Photography Tools

I’ll admit I was excited when I started thinking through the possibilities of this as an application – being able to place images I’ve already taken into other environments to show how they look in-situ.

Photographers have been doing this for decades – normally using a pixel editor such as Photoshop or an online “AI tool” (hint: they’re lying, most of those tools are not AI, they’re just a pre-determined output from a set algorithm and base images, but anyway…). This takes effort and time in the case of the former, and the latter is known for its sub-par results.

So surely this is a “killer app” as far as AI implementations can deliver for “real world photographers”?

Let’s ask Midjourney to place my shot “Journey’s End” onto “the interior walls of a luxury yacht”.

Few words sum up that output better than “fail”.

Maybe the yacht was a stretch too far – so let’s try having it placed into a “luxury apartment” instead…

Nope.

Of course, there’s a chance that my prompts weren’t specific enough – so I tried 10-12 different variations, all delivering something well short of what I’d consider to be useful.

Besides, isn’t the point of AI that it learns and can interpret needs based on fuzzy input from the user?

If so, what on earth does it have as an excuse for these disasters?

So let’s try more “human” wording – “hang it on the wall”, for example…

I mean, maybe these luxury apartments are indeed built under the water line at high tide…

Perhaps I’m confusing it, so let’s try the other way round – show me a living room wall and THEN add the picture onto it?

Now I know I’m not really blending here (and that’s the point – I want AI to come up with a non-existent apartment so it’s different from any template) – but really…?

I give up.

Accordingly, my hopes for these tools to be useful to photographers in the short term have been dramatically reduced – which is a shame, as these are the areas that could really help our efficiencies instead of damaging our realities like much of the focus has been to date.

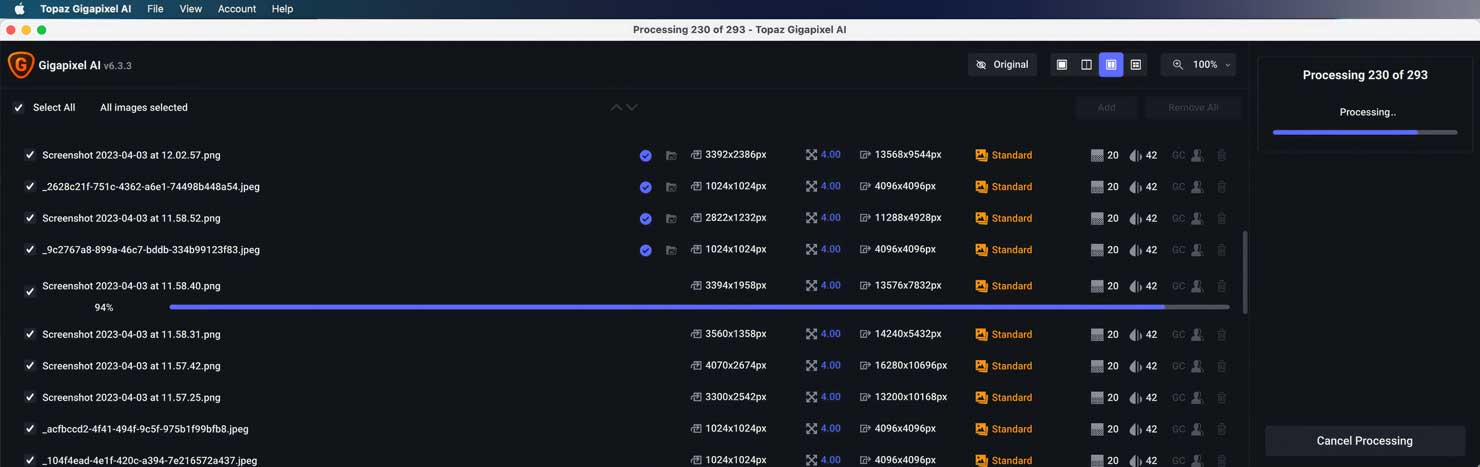

There are tools out there that have professed to use AI (of sorts) for some time – Topaz, for example, while far from perfect, does manage to deliver reasonable upscaled images and video from Gigapixel AI and Video AI respectively.

How much genuine “AI” is actually going on in the background, I don’t know – but I wouldn’t call them hugely adaptive or intelligent – they just do a decent, specific, job.

Let’s hope, if we’re to get any benefit out of AI’s abilities as photographers, the tools soon manage to learn a lot quicker in these areas.

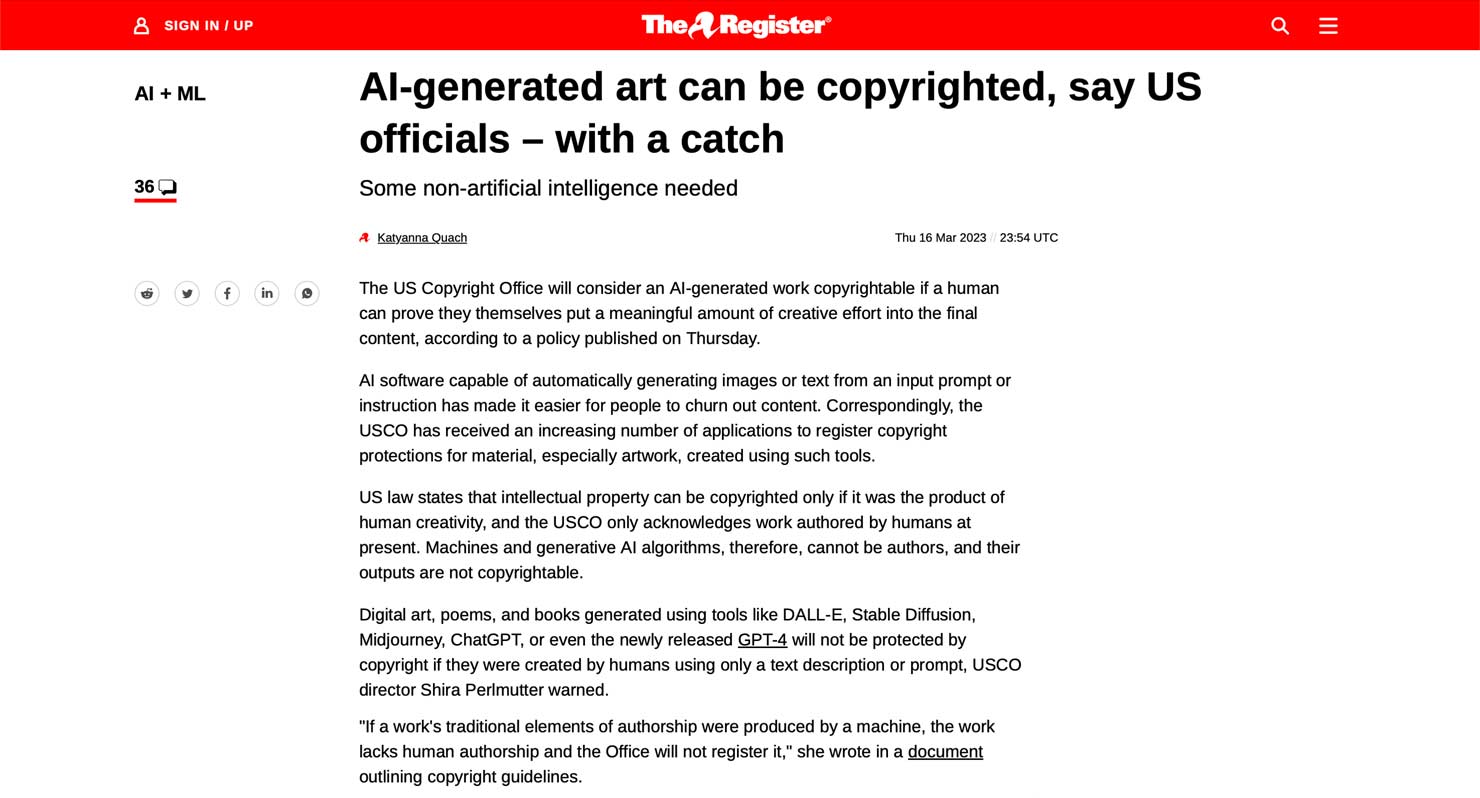

10. Copyright Implications & Ethical Constraints

There’s been consistent concern about the impact on copyright ownership, enforcement and infringement that AI will have on artists as its adoption spreads.

In terms of the output from AI, seemingly, there was some initial clarity: The view was/is that “copyright can only be assigned to a person, therefore an AI machine/process/‘bot’ cannot own or be assigned copyright over its output” – Simple enough, right?

The problem is, there are human inputs which are responsible for these results – the prompts, the seeded images, the parameters – so at what point does it become possible for a human to own something that machine learning has “created”?

The US Copyright Office (USCO) have recently decided they have the answer.

Now, defining the amount of “creative effort” that a human put into the process – that’ll be tough – but there is now a route to ownership of output that’s at least being explored.

Conversely, when it comes to AI’s input, we end up in a much stickier minefield as landscape photographers – one that’s already got several companies (and countries for that matter) quite fired up.

The problem is, of course, that AI hasn’t been to any of these places to get its own source images – so it uses ours instead. Billions and billions of our own images (available online) have been scraped, collected, analysed and seeded by these bots for use in generating “new” outputs.

Getty Images (as one example) are already suing the authors of Stable Diffusion at Stability AI Inc, over their use of images to train the AI bots – several others are likely to follow. After all, these images are (quite literally) their assets (held on behalf of photographers), and anyone using them without permission is, effectively, stealing…

But here’s the problem: AI is only learning from the images – it’s technically not, directly, reusing them.

So, as humans, if we look at 20 images of Horseshoe Bend, and then decide to go there and create our own “in a similar fashion” to another photographer’s work – while less than creative (at best) – it’s not a copyright infringement.

Treating the work of an AI bot any differently to that, therefore, forces the argument into a seriously grey area.

Am I happy about the fact that my hard-earned content (that often takes 100x the actual shoot time in planning, travel, editing, reshooting) is being used, for free, by these systems to create new “artworks”? No, not at all.

Do I think we can stop them from doing so? In the long run, I very much doubt it.

What’s more likely to be the reality in future is that AI no longer needs its human inputs – as it starts to learn from its own outputs for any given scene, creating more and more artificial content to seed itself with over time.

But then, wow – what a perverse and artificial universe we’ll live in when “photographs” are predominantly of fictional places that only existed inside a machine-learning-“mind” as reference.

And the moral challenges don’t stop there – much has also been made of the importance of placing “ethical constraints” and boundaries into the operation of AI tools.

As a test, no matter how hard I tried to feed prompts, there was absolutely no way Midjourney was going to allow me to show this fake photographer falling to his death as a result of stupidly crossing the barrier that’s been put in place for his safety.

I couldn’t even make him jump off.

But that will change over time, as people explore loopholes, and find clever tricks to force the bots to do what we want – ethical or not.

Although it was using old software as an experiment, only this month a user managed to trick AI into handing over fresh new Windows 95 keys – bypassing ChatGPT’s own ethical constraints around producing proprietary software keys. (Something which it’s specifically programmed not to do.)

And there’s the problem – these “constraints” are only as good as the humans that coded them. They’re able to be bypassed by human trickery (we’re good at that, as a species), and that leaves the door open to some very worrying scenarios.

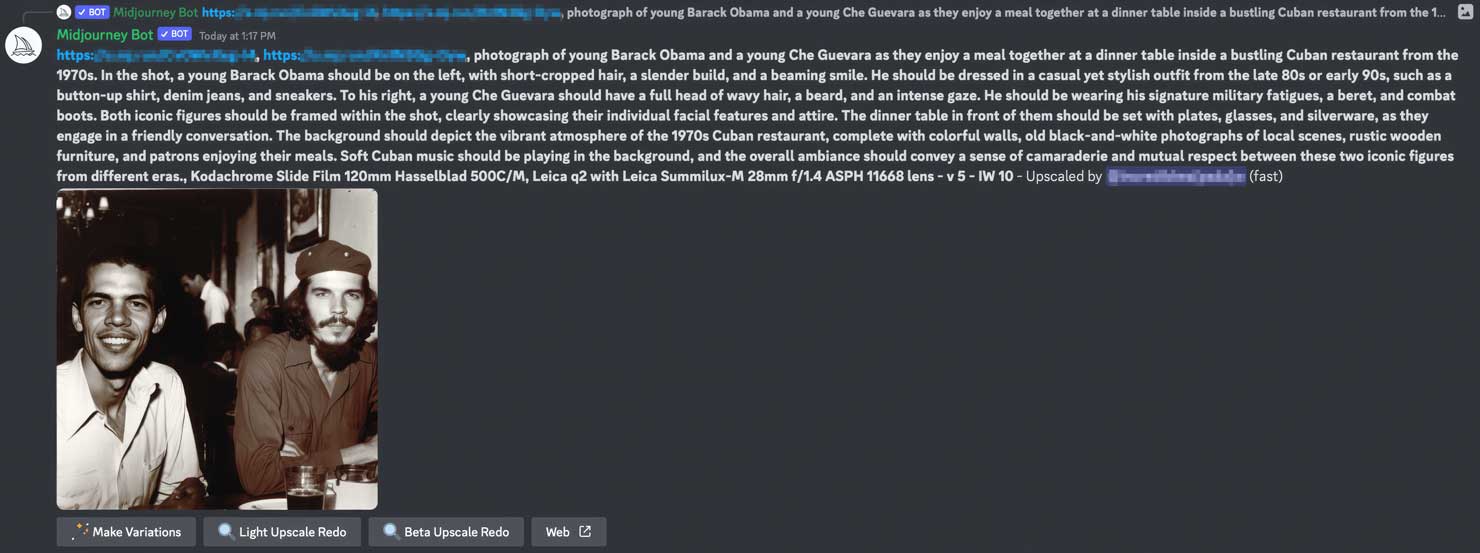

This article stops short of exploring the wider implications for general media companies, but as a benchmark – while producing some of the example images above, I noticed there was someone using the same Discord channel to build very specific images of a young Barack Obama having a meal with Che Guevara. We’re not just talking about the scenario itself, but the specific place, time, era, camera & lens in use, film type, clothing, the works.

You’re not going to try and tell me that photo was being generated for factually correct, historical, or wholly ethical reasons, are you…?

11. So, Are We All Doomed?

Despite a lot of the concerns I hold in each of the areas above, I don’t actually think we’re heading for doom.

The first thing to consider, and accept, is that AI is here to stay. Sure, countries and companies are resisting its adoption (Italy has just attempted to “ban” it). But that’s like trying to “ban the internet” – the tools are already so embedded in our lives, across borders, it’s just not feasible in the long term.

So as photographers, should we be worried?

If I was shooting product, still life, or studio photography that shows off a pre-staged environment – I’d be concerned. It’s possible that AI could help and become a part of my workflow – but where that type of photography has huge setup costs to “fake the scene”, AI can step in to literally fake it for 0.001% of the current outlay.

Is that fair, or positive for the industry? Probably not – but it’ll become a reality.

For those of us who capture scenes that are spontaneous, crafted by nature, out of our control – the threat would seem to depend on how and why we do it.

If it’s staged – by definition, AI can stage it – faster, cheaper, arguably better.

If you prefer to “blend” realities, or fake your images, then AI’s got your job already.

But if we’re there to capture a real moment that actually happened, and share that with other humans to evoke a feeling, a memory, an emotion – then AI’s all out of tricks.

Of course, it can always then use that new content to try harder in future – but the fact is, it’s not capable of capturing that moment, because it wasn’t there like we were.

In-camera AI may help to get the best out of a scene; post-processing AI may help to (hopefully) improve the final output.

But for me, a huge part of the process of capturing a scene is the wonder of seeing it with my own eyes at the same time. With that in mind, I’ll happily continue producing real results of moments in time that actually occurred, and doing my utmost to capture them at their best.

As for my own use of AI?

Well, I guess I’ll just have to see what other tricks it has up its sleeve as the “toy” that I see it currently being…

…like turning me (apparently?) into an elvish fairy with wings and a golden mullet.

Well done Midjourney, fair play on that one – fair play.